Top 3 Challenges of Bringing AI into Healthcare

Artificial intelligence (AI) is full of promise for supporting decision-making and improving care, but there are still a few challenges that need solving before it can be embraced across a wide spectrum of applications.

As the healthcare industry continues its journey toward full digitalization, provider organizations are increasingly eager to bring AI and machine learning (ML) into their health IT ecosystems.

AI-based tools can process enormous volumes of data much faster and more accurately than any human being, identifying even the most subtle patterns in images, recordings, clinical test results, and financial or administrative data. By highlighting trends and predicting future events, these algorithms offer timely, actionable insights that may help improve decision-making whenever and wherever it’s needed.

While the concept is fascinating, the operationalization of AI is still relatively new. As the technology evolves, it has the potential to transform the way healthcare is delivered and dramatically improve outcomes for patients.

Many challenges remain before AI can truly augment decision-making at scale, including questions of ethics, generalization, and how best to bring these tools into the clinical workflow.

Fostering Acceptance and Trust from Patients and Healthcare Professionals

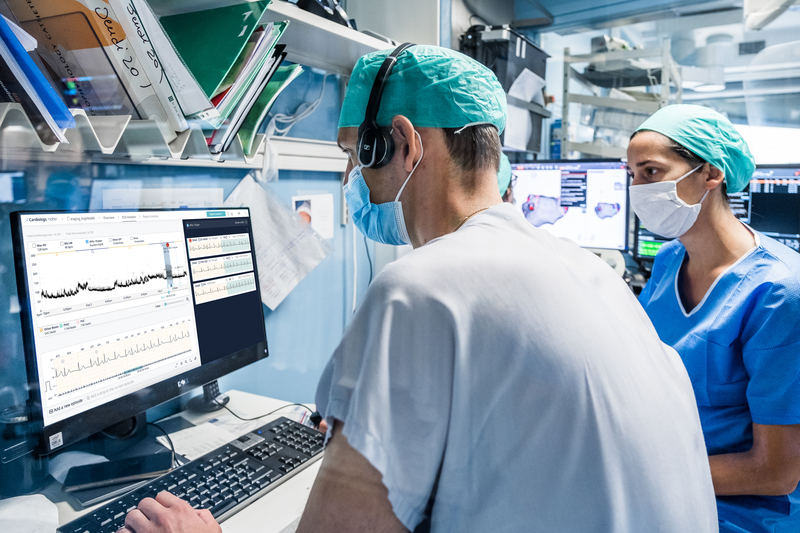

As AI becomes a reality, healthcare professionals and patients have mixed opinions about supplementing human decision-making with digital tools.

A recent Medscape survey found that about 49% of US physicians are skeptical about integrating AI into their daily practice, fearing that it will threaten their role as healthcare providers, yet 70% believe it will make their decisions more accurate in the future. The general public largely feels the same: while some are excited about the potential, others don’t believe AI is ready for prime time or that algorithms should ever fully replace what humans currently do.

Only healthcare executives are fully on board with AI at the moment, with 98% of organizational leaders telling Optum that they already have a plan to bring AI into their health systems. Forty percent are primarily focused on using AI to support virtual care so that they can interact with their patients at a distance and provide remote monitoring.

Nearly all executive respondents believe they have a duty to ensure the technology is used responsibly within their organizations, which is in line with existing concerns about data privacy, security, and the ethical use of technology.

To provide assurance that AI can be used appropriately without compromising patient privacy or clinical autonomy, organizations will need to work closely with their health IT partners to understand how AI tools are developed, the realistic limitations of the algorithms, and what happens to patient data when it interacts with the system.

Avoiding Hidden Biases and Improving Accuracy

Organizations can accelerate the acceptance of AI by choosing tools that are robustly designed with a focus on accuracy, adaptability, and inclusion.

Algorithms are typically trained on well-controlled data sets that may be limited in scope and variety, but real-world data is rarely as clean and uniform as these training files. A strong algorithm will be able to correctly ingest actual clinical data from diverse patients and return reliable, trustworthy results to the end user.

This generalizability is crucial for ensuring that AI tools are able to continuously learn and refine their decision support capabilities without perpetuating unintentional biases or missing key results.

Prospective adopters should consider the underlying design and training of AI algorithms before integrating the tools into their clinical care strategies. They should closely examine the algorithm’s previous results and test the tools thoroughly on actual data to make certain they are appropriate for the use cases at hand.
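One way to test a tool on actual data is to compare its predictions against clinician-confirmed labels and report sensitivity and specificity separately for each patient subgroup or site, so that a strong overall score cannot hide a weak spot. The following is a minimal sketch of that idea, not any vendor's validation method. The function names, the record layout, and the subgroup labels `site_a` and `site_b` are assumptions made for illustration, and the sample records are synthetic.

```python
from collections import defaultdict


def confusion_counts(y_true, y_pred):
    """Count (tp, fp, tn, fn) for binary labels, where 1 means condition present."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            tp += 1
        elif truth == 0 and pred == 1:
            fp += 1
        elif truth == 0 and pred == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity); a value is None when it is undefined."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    sensitivity = tp / (tp + fn) if (tp + fn) else None
    specificity = tn / (tn + fp) if (tn + fp) else None
    return sensitivity, specificity


def metrics_by_subgroup(records):
    """Group (subgroup, truth, prediction) records and score each subgroup."""
    grouped = defaultdict(lambda: ([], []))
    for subgroup, truth, pred in records:
        grouped[subgroup][0].append(truth)
        grouped[subgroup][1].append(pred)
    return {
        name: sensitivity_specificity(truths, preds)
        for name, (truths, preds) in grouped.items()
    }


# Synthetic, illustrative records only: (subgroup, confirmed label, tool output).
example_records = [
    ("site_a", 1, 1), ("site_a", 1, 1), ("site_a", 0, 0), ("site_a", 0, 1),
    ("site_b", 1, 0), ("site_b", 1, 1), ("site_b", 0, 0), ("site_b", 0, 0),
]
print(metrics_by_subgroup(example_records))
```

On this toy data, `site_a` scores a sensitivity of 1.0 and a specificity of 0.5, while `site_b` scores 0.5 and 1.0. That kind of gap between subgroups is what a local evaluation is meant to surface before a tool goes into routine use.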

For example, a recent study demonstrated that Cardiologs’ AI algorithm can predict the short-term risk of atrial fibrillation (AFib) based on single-lead ambulatory electrocardiograms (ECGs) that show normal sinus rhythm. The algorithm reliably detected subtle signs that suggest future onset, adding value to Holter recordings and helping physicians determine which patients may benefit from long-term monitoring.

Integrating Artificial Intelligence into the Clinical Workflow

As trust and accuracy continually improve, more and more healthcare organizations will start to utilize AI across a variety of clinical and non-clinical domains.

Healthcare providers and patients will both need to adapt to these new strategies, especially if Remote Patient Monitoring (RPM) devices are involved. RPM has been shown to improve satisfaction, patient self-management, and clinical outcomes. In one study, 85% of heart failure patients were more confident about taking care of themselves after adding RPM to their care regimens, while 52% reported improved communication with their physicians.

RPM devices backed by artificial intelligence may be able to build on these positive perceptions by offering patients predictive insights and detailed information about how and when to seek care for their conditions.

However, these tools will need to be paired with intuitive workflows that avoid increased burdens for providers. User interfaces should provide a streamlined, centralized place to review and interact with prioritized patient data. Reporting and alerts should be automatic to smooth the process of documentation and patient management.

Cardiologs’ latest clinical study showed that its AI algorithm dramatically reduced the inconclusive results returned by the latest Apple Watch ECG companion app (Apple ECG 2.0). While the Apple ECG 2.0 app yielded inconclusive diagnoses for 19% (19/101) of all smartwatch recordings, Cardiologs’ deep neural network model reduced that number to 0% (0/101) while maintaining accuracy, specificity, and sensitivity.
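The percentages above follow directly from the reported counts, and with only 101 recordings it can help to see how much uncertainty surrounds each rate. The sketch below recomputes the two rates and adds a Wilson score interval for each. The interval is an illustration added here and is not a figure reported by the study; the function names and the choice of a 95% level (z = 1.96) are assumptions.

```python
import math


def proportion(events, total):
    """Return events / total, for example the share of inconclusive recordings."""
    if total <= 0:
        raise ValueError("total must be positive")
    return events / total


def wilson_interval(events, total, z=1.96):
    """Wilson score interval for a proportion (z = 1.96 gives roughly 95%)."""
    p = proportion(events, total)
    denom = 1 + z**2 / total
    center = (p + z**2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    # Clamp to [0, 1] to absorb floating-point rounding at the boundaries.
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Counts taken from the text: 19 of 101 versus 0 of 101 inconclusive recordings.
for label, events in [("Apple ECG 2.0 app", 19), ("Cardiologs model", 0)]:
    rate = proportion(events, 101)
    low, high = wilson_interval(events, 101)
    print(f"{label}: {rate:.1%} inconclusive (interval {low:.1%} to {high:.1%})")
```

Even with zero inconclusive results out of 101, the upper end of the interval stays a few percentage points above zero, which is one reason to read small-sample results alongside the sample size.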

These results offer great promise for using smartwatches to screen for irregular heart rhythms. By eliminating inconclusive results, Cardiologs can save physicians significant time spent on triage and analysis, and reduce the risk of missed, delayed, or incorrect arrhythmia detection.

Further reading:

Real-life application of Artificial Intelligence for ECG analysis